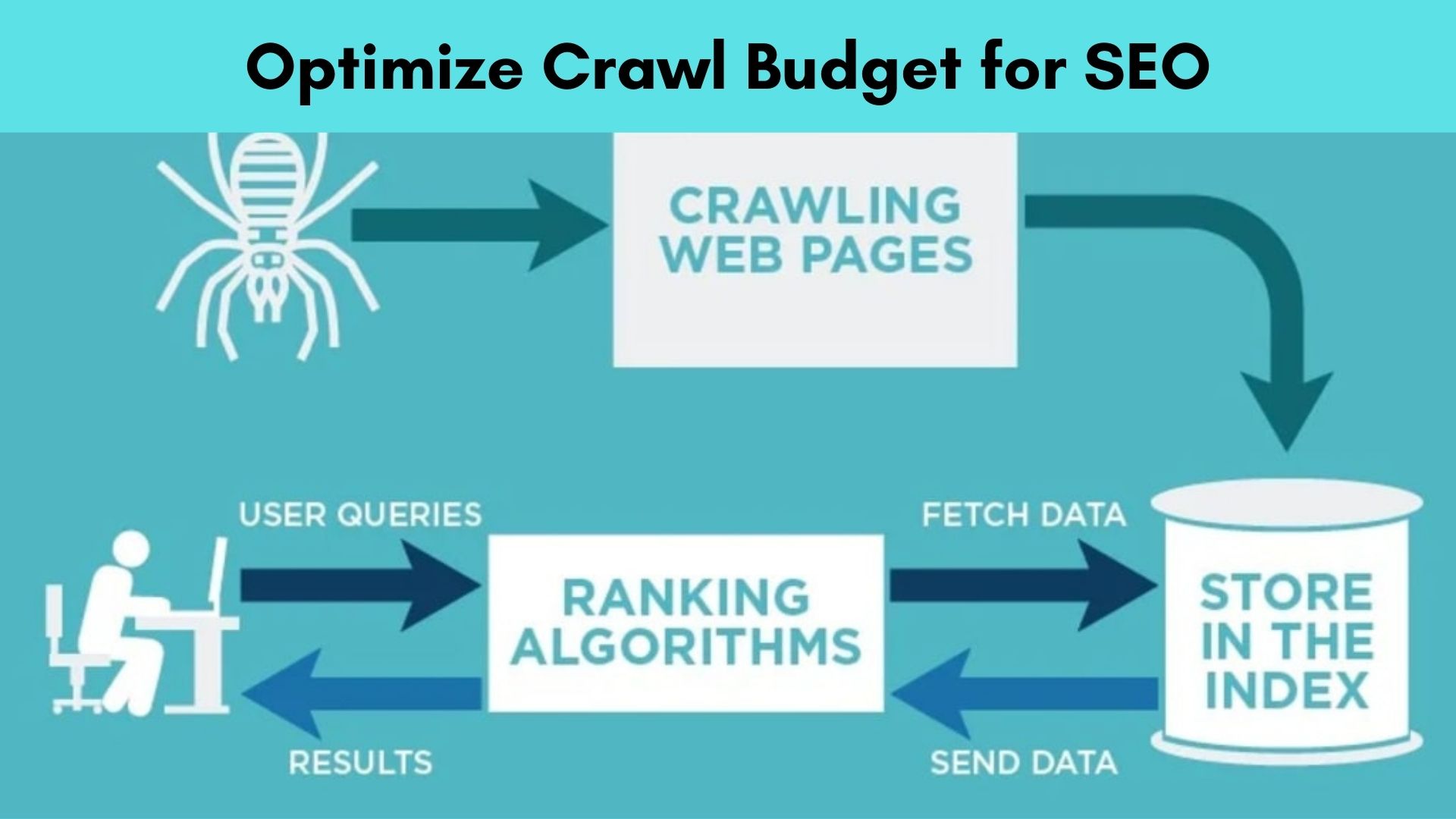

Crawling is the first step toward indexation, so it directly affects how visible a web page is in Google results. Below is a list of seven actions that help make better use of the SEO crawl budget.

What is a crawling budget?

Crawl budget is the number of URLs on a website that search engine crawlers crawl within a given period, so that those pages can be indexed and shown on the SERP.

Google allocates a crawl budget to each website. Based on that budget, Googlebot decides how often it crawls the site's pages.

Why is the Crawl Budget limited?

The crawl budget is limited so that a website does not receive more page requests than its server can handle, which could significantly affect user experience and site performance.

How to determine the crawl budget

To determine a website's crawl budget, check the Crawl Stats report in Google Search Console. If you need more detail about search engine bots, analyze the server log files to see how they crawl and index the site.
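As a rough illustration of the log analysis mentioned above, the sketch below counts Googlebot requests per path. It assumes the server writes the common "combined" access log format; the sample lines, the `LOG_LINE` pattern, and the `googlebot_hits` helper are all hypothetical. A user agent string can be spoofed, so treat the counts as an estimate.

```python
import re
from collections import Counter

# Assumed "combined" log format: request line, status, size, referer, user agent.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests per path whose user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


# Hypothetical sample lines, made up for this example.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'66.249.66.1 - - [10/Jan/2024:10:00:00 +0000] "GET /products/shoes HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Jan/2024:10:05:00 +0000] "GET /products/shoes HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Jan/2024:10:06:00 +0000] "GET /old-page HTTP/1.1" 404 310 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Jan/2024:10:07:00 +0000] "GET /products/shoes HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

hits = googlebot_hits(sample_log)
for path, count in hits.most_common():
    print(path, count)
```

Paths that receive many bot requests but carry little SEO value are the first candidates for cleanup.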

How to Use the Crawl Budget

The seven actions below help Googlebot spend the crawl budget on the pages that matter.

Allow crawling of important pages in the robots.txt file

To make sure that relevant pages and content can be crawled, those pages must not be blocked in the robots.txt file. The robots.txt file is meant to keep crawlers away from files and folders that do not need to be crawled. On large websites, this is the best way to preserve the crawl budget.
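One way to check that important pages are not blocked is Python's standard `urllib.robotparser` module. This is a minimal sketch: the robots.txt rules, the example.com URLs, and the `blocked_pages` helper are made up for illustration, and the standard-library parser may not interpret every rule exactly as Googlebot does.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for the example.
ROBOTS_TXT = """
User-agent: *
Disallow: /cart/
Disallow: /search
Allow: /
"""


def blocked_pages(robots_txt, urls, agent="Googlebot"):
    """Return the URLs that the given robots.txt rules block for the agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.strip().splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


# Hypothetical URLs: the first should stay crawlable, the second should not.
urls = [
    "https://example.com/products/shoes",
    "https://example.com/cart/checkout",
]

blocked = blocked_pages(ROBOTS_TXT, urls)
print(blocked)
```

If a page that should rank shows up in the blocked list, the matching `Disallow` rule needs to be narrowed or removed.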

Avoid long redirect chains

When a website has chains of several 301 or 302 redirects, the search engine may stop following them for a while before it reaches the important pages. Every extra redirect wastes part of the crawl budget.

Ideally, redirects would be avoided altogether. However, large websites are unlikely to manage without any redirects. The best approach is to redirect only when needed and to keep each chain to a single redirect.
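To make the "no more than one redirect" rule concrete, here is a small sketch that finds chains longer than one hop. It works on a redirect map you would export from your server configuration or a crawler; the `redirects` dictionary and both helper names are hypothetical.

```python
def redirect_chain(start_url, redirects, max_hops=10):
    """Follow redirects from start_url and return every URL visited, in order."""
    chain = [start_url]
    seen = {start_url}
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        chain.append(target)
        if target in seen:  # redirect loop: stop instead of cycling forever
            break
        seen.add(target)
    return chain


def long_chains(redirects):
    """Return chains with more than one hop (more than two URLs in the chain)."""
    chains = {url: redirect_chain(url, redirects) for url in redirects}
    return {url: chain for url, chain in chains.items() if len(chain) > 2}


# Hypothetical redirect map: source path -> target path.
redirects = {
    "/old-page": "/newer-page",
    "/newer-page": "/newest-page",
    "/sale": "/offers",
}

chains = long_chains(redirects)
print(chains)
```

Each chain reported here can be shortened by pointing the first URL straight at the final destination.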

Manage URL parameters

An endless combination of URL parameters creates duplicate variations of URLs that lead to the same content. Crawling these unwanted parameter URLs drains the crawl budget, adds server load, and reduces the chance that the relevant SEO pages get crawled and indexed. To save crawl budget, the usual advice was to tell Google how to handle each parameter in the URL Parameters tool, found in Google Search Console under Legacy tools and reports. Google retired that tool in 2022, so parameter URLs now have to be kept in check on the site itself, for example by blocking them in the robots.txt file.
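To see how many parameter variations point to the same content, URLs from a crawl or log export can be grouped by a normalized form. The sketch below is one possible approach; the `IGNORED_PARAMS` set is an assumption and has to be adapted to the parameters that do not change content on your own site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that do not change the page content.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sort"}


def normalize(url):
    """Drop ignored parameters and sort the rest so equal pages compare equal."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def group_duplicates(urls):
    """Map each normalized URL to the crawled variations that collapse into it."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: found for key, found in groups.items() if len(found) > 1}


# Hypothetical URLs for the example.
crawled = [
    "https://example.com/shoes?color=red",
    "https://example.com/shoes?utm_source=mail&color=red",
    "https://example.com/shoes?color=red&sort=price",
    "https://example.com/hats",
]

duplicates = group_duplicates(crawled)
print(duplicates)
```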

Improve Site Speed

Improving site speed increases the chance that Googlebot crawls more pages. According to Google, a fast site improves the user experience and also increases the crawl rate.

Simply put, a slow website eats into a valuable crawl budget. When site speed improves, pages load quickly and Googlebot has enough time to visit and crawl more pages.

Most leading e-commerce SEO services make page speed part of their strategy for e-commerce websites, aiming to drive more traffic and improve the user experience so that the bounce rate goes down.

Review the sitemap

The XML sitemap should contain the most important pages, so that Googlebot visits and crawls them frequently. It is therefore necessary to keep the sitemap up to date and free of redirecting URLs and errors.
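A sitemap review can be partly automated by comparing its URLs with status codes collected by a crawler. The following sketch assumes the standard sitemaps.org namespace; the sample sitemap, the `statuses` dictionary, and the helper names are invented for the example.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content for the example.
SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/old-page</loc></url>
  <url><loc>https://example.com/missing</loc></url>
</urlset>"""


def sitemap_urls(xml_text):
    """Return all <loc> values listed in an XML sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


def problem_entries(xml_text, statuses):
    """Return sitemap URLs whose recorded status code is anything other than 200."""
    return {
        url: statuses[url]
        for url in sitemap_urls(xml_text)
        if url in statuses and statuses[url] != 200
    }


# Hypothetical status codes, e.g. taken from a crawler export.
statuses = {
    "https://example.com/": 200,
    "https://example.com/old-page": 301,
    "https://example.com/missing": 404,
}

problems = problem_entries(SITEMAP_XML, statuses)
print(problems)
```

Entries that redirect should be replaced by their final URL, and entries that return errors should be fixed or removed from the sitemap.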

HTTP Error Correction

Broken links and server errors use up crawl budget. Take the time to check your website for pages that return 404 and 503 errors, and fix them as soon as possible.

To do this, review the coverage report in Search Console to see whether Google has detected any 404 errors. Download the full URL list and analyze whether each 404 page can be redirected to an identical or similar page. If so, redirect the broken 404 pages to the new ones. Screaming Frog and SE Ranking are recommended for this task. Both are effective website audit tools that cover the points of an SEO checklist.
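Finding a similar page for each broken URL can be approximated with simple string matching. The sketch below uses `difflib.get_close_matches` from the Python standard library; the paths and the `suggest_redirects` helper are hypothetical, and every suggestion should be reviewed by hand before a redirect is set up.

```python
from difflib import get_close_matches


def suggest_redirects(broken_paths, live_paths, cutoff=0.6):
    """Suggest the most similar live path for each broken path, or None."""
    suggestions = {}
    for path in broken_paths:
        matches = get_close_matches(path, live_paths, n=1, cutoff=cutoff)
        suggestions[path] = matches[0] if matches else None
    return suggestions


# Hypothetical 404 paths (e.g. from a Search Console export) and live paths.
broken = ["/products/red-shoes-2019", "/xyz"]
live = ["/products/red-shoes", "/products/blue-hats", "/about"]

suggestions = suggest_redirects(broken, live)
for old, new in suggestions.items():
    print(old, "->", new)
```

A result of `None` means no live page is similar enough, so the broken URL is better left as a 404 than redirected to an unrelated page.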

Internal links

Googlebot tends to prioritize URLs that have a large number of internal links pointing to them. Internal links let Googlebot discover the different types of pages on the site that need to be indexed to appear in the Google SERPs. Internal linking was also one of the key SEO trends of 2020. It helps Google understand the site structure and navigate the site smoothly.
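Counting inbound internal links per page shows which URLs are well linked and which are hard for a crawler to discover. This is a minimal sketch over a link graph that a crawler export could provide; the `link_graph` data and the function name are assumptions.

```python
from collections import Counter


def inbound_link_counts(link_graph):
    """Count how many distinct pages link to each page (self-links ignored)."""
    counts = Counter({page: 0 for page in link_graph})
    for source, targets in link_graph.items():
        for target in set(targets):
            if target != source:
                counts[target] += 1
    return counts


# Hypothetical link graph: page -> pages it links to.
link_graph = {
    "/": ["/products", "/about"],
    "/products": ["/products/shoes", "/"],
    "/products/shoes": ["/products", "/"],
    "/about": ["/"],
    "/landing-2019": ["/products"],
}

counts = inbound_link_counts(link_graph)
weakly_linked = sorted(page for page, count in counts.items() if count == 0)
print(counts.most_common(3))
print(weakly_linked)
```

Pages with no inbound links are candidates for new internal links if they are meant to appear in search results.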

Conclusion

Improving how a website is crawled and indexed is part of making the website work well. Companies providing SEO services in India routinely assess the crawl budget as part of their SEO research.

If your website is well maintained, or the site is very small, there is no need to worry about the crawl budget. But in some cases, such as large websites or newly added pages with many redirects and errors, it is necessary to pay attention to how the crawl budget is spent.

Analyzing the crawl reports and monitoring the crawl rate regularly helps you notice a sudden rise or fall in the crawl rate.